Is mathematics included in computer science?

literally, no! technically, yes!

it is always better to start with a question rather than an answer. well, at least that's what I feel, hence the name of this blog.

I know a lot of you are already aware of this delicate dance between maths and machines. but this blog is meant for everyone to read and understand. I have tried to keep it as simple as an explanation for a 5-year-old child. although the probability of that actually working is very, very low 😂

the last time I tried talking about this w/ my much younger cousin, he said w/ a straight face that the m in computer science can, and probably does, stand for mathematics, and that the c in mathematics stands for computer science. 🙂

🤔 when asked how many letters there are in the word 'mathematics', he counted on his fingers and swiftly answered 11. still not confirmed. but indeed, there is a dance of electrons, bits, and bytes.

so how is maths relevant?

let us start with numbers

it is worth noting that in everyday life we use decimal numbers. the basic set of digits is 0-9, and after those 10 digits the same pattern repeats.

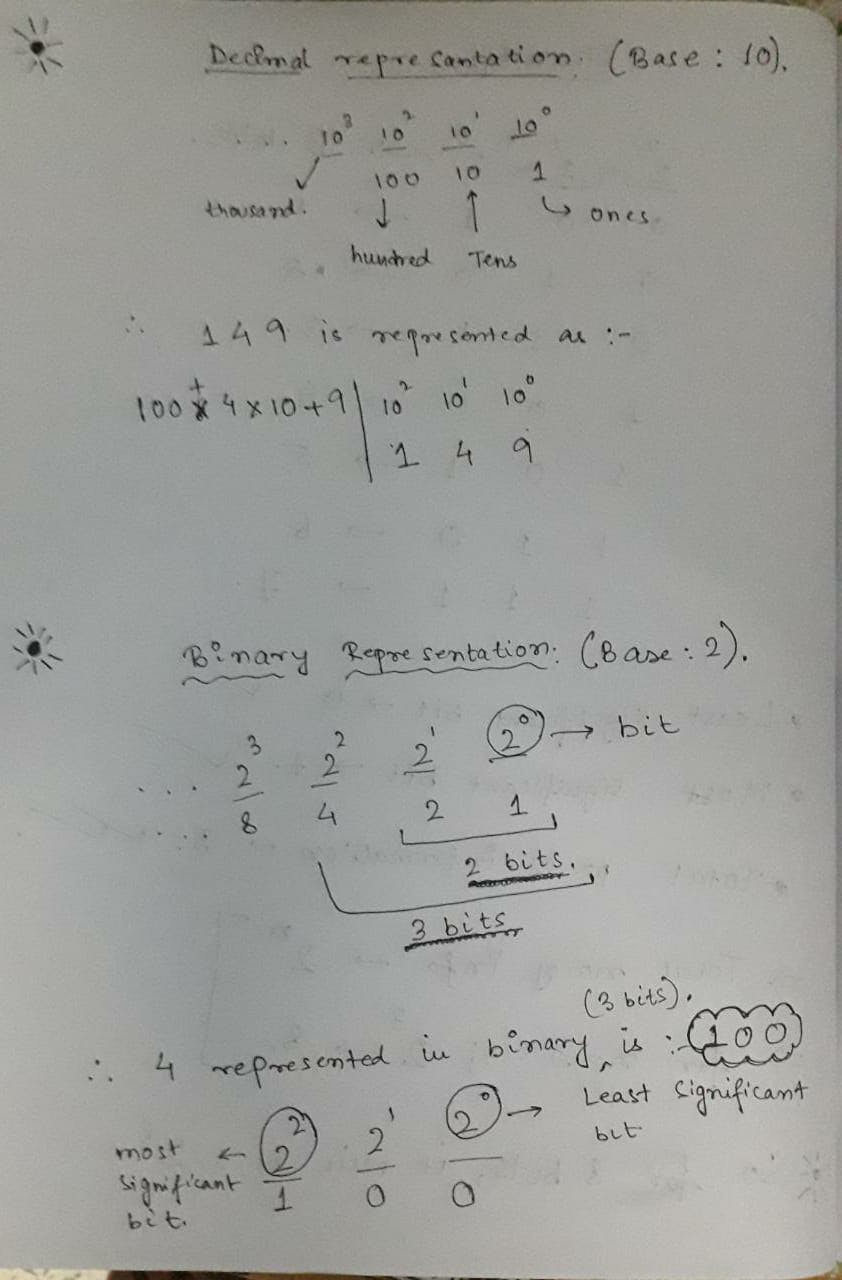

look at the snippet below to get the picture:

0, 1, 2, 3, 4, 5, 6, 7, 8, 9,

1(0, 1, 2, 3, 4, 5, 6, 7, 8, 9),

2(0, 1, 2, 3, 4, 5, 6, 7, 8, 9), ...

take any number, any one at all, and it is just a mix and match of these digits. fancy! numbers made of a single digit are one-digit numbers, then come two-digit numbers, three-digit numbers, and so on, without end.

but what is 123? what makes it different from 1, 2, 3?

123 is a three-digit number. it is interesting how we break numbers down.

- the rightmost digit (3) is called the ones digit, since it is in the ones place.

- the middle digit (2) is in the tens place. each step here is worth 10, because we have to run through all 10 single digits (0-9) before we reach two digits.

- the leftmost digit (1) is in the hundreds place. each step here is worth 10 x 10 = 100, because we have to run through all 100 two-digit combinations (00-99) before we reach three digits.

so 123 really means 1 x 100 + 2 x 10 + 3 x 1, and we read it as one hundred twenty (2 x 10) three.

it doesn't end there: next come the thousands, ten-thousands, and so on...

this is why we say decimal numbers have a base of 10: each place is worth 10 times the place to its right.

- ones : 10 ^ 0 = 1

- tens : 10 ^ 1 = 10

- hundreds : 10 ^ 2 = 100

and so on...
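to see the place-value idea in action, here is a small python sketch (python is just my pick for illustration; the post itself has no code). the function name `place_values` is hypothetical, made up for this example. it peels digits off the right with `divmod` and pairs each digit with its power of the base:

```python
def place_values(number: int, base: int = 10) -> list[tuple[int, int]]:
    """Split a non-negative integer into (digit, place value) pairs, leftmost first."""
    pairs = []
    power = 0
    while True:
        number, digit = divmod(number, base)  # peel off the rightmost digit
        pairs.append((digit, base ** power))  # its place value is base ^ power
        power += 1
        if number == 0:
            break
    return pairs[::-1]  # largest place first, the way we write numbers

parts = place_values(123)
print(parts)                                         # [(1, 100), (2, 10), (3, 1)]
print(sum(digit * place for digit, place in parts))  # 123
```

the `base` parameter defaults to 10. passing `base=2` gives binary place values instead, which is exactly the trick computers use.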

that is great, but your immediate question might be:

but computers are not at all like us?

computers do not understand things the way we do. a computer runs on power, so all it has access to is electricity. how is that possible?

imagine a bulb - and also that it is connected to a source of power. 💡

with the bulb connected, only two things can happen (ignoring the chance that it breaks): at any moment, the bulb is either switched on or off. we can treat this off/on as false/true, or 0/1.

so the information a single bulb carries is either off or on, better written as 0 or 1: 0 means off (false) and 1 means on (true). since there are only 2 possible outcomes, 0 or 1, this unit of information is called a bit (binary + digit).

computers understand binary

the computer only understands 0s and 1s. so how does it handle anything more than a single 0 or 1?

number of values that n bits can represent: 2 ^ n

a single bit can represent 2 ^ 1 = 2 values (0 or 1). each extra bit doubles that, so n bits together can represent 2 ^ n different values.

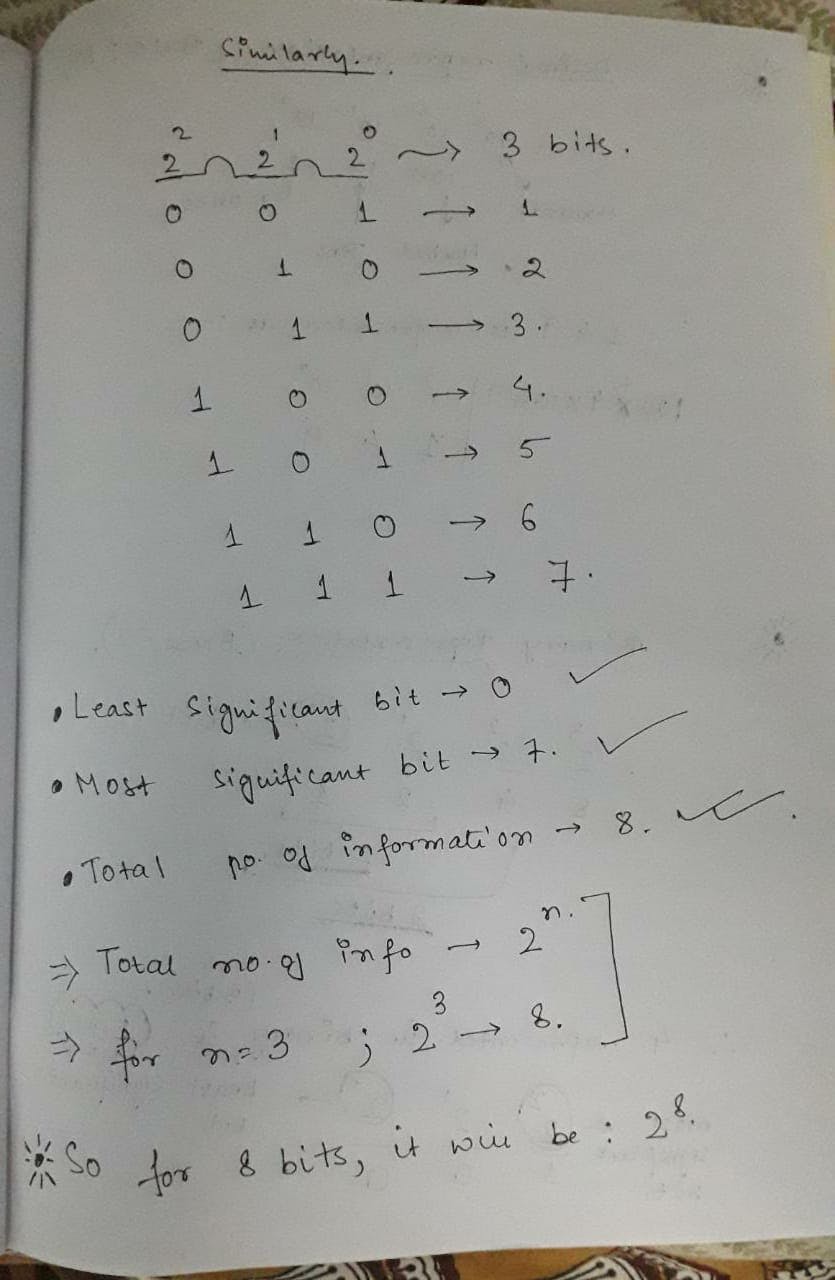

2 bits can represent 2 ^ 2 = 4 values, i.e. 0-3.

3 bits can represent 2 ^ 3 = 8 values, i.e. 0-7.
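to check the doubling for yourself, this python sketch lists every on/off pattern that n bulbs (bits) can show. the helper name `all_bit_patterns` is hypothetical; it relies on the standard library's `itertools.product` to generate every combination of "0" and "1":

```python
from itertools import product

def all_bit_patterns(n: int) -> list[str]:
    """Every pattern that n on/off switches (bits) can show, as strings of 0s and 1s."""
    return ["".join(bits) for bits in product("01", repeat=n)]

for n in (1, 2, 3):
    patterns = all_bit_patterns(n)
    # each extra bit doubles the count: n bits give 2 ^ n patterns
    print(n, len(patterns), patterns)

# reading the 2-bit patterns as binary numbers gives 0-3
print([int(p, 2) for p in all_bit_patterns(2)])  # [0, 1, 2, 3]
```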

the computer handles numbers in a way that is very similar to the decimal system we use:

- instead of a base 10 (decimal) computers use base 2 (binary)

refer to the handwritten notes below for a better understanding ✍:

how are numbers represented in binary? 🤔 pretty simple, take a look below:

think of each bit as one of the bulbs we imagined: 3 bits are 3 bulbs, 8 bits are 8 bulbs. and 8 bulbs can represent 2 ^ 8 = 256 values, i.e. 0-255.
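here is one way to turn a decimal number into 8 "bulbs" and back, sketched in python under the assumption that we use the common repeated-division-by-2 method (the handwritten notes may show a different one). the names `to_binary` and `from_binary` are hypothetical helpers for this example; going back is the same place-value sum as in decimal, just with powers of 2:

```python
def to_binary(number: int, width: int = 8) -> str:
    """Write a non-negative integer in base 2, padded with leading 0s to `width` bits."""
    if not 0 <= number < 2 ** width:
        raise ValueError(f"{number} does not fit in {width} bits")
    bits = ""
    for _ in range(width):
        number, remainder = divmod(number, 2)  # the remainder is the next bit, rightmost first
        bits = str(remainder) + bits
    return bits

def from_binary(bits: str) -> int:
    """Add up place values: each bit times 2 ^ position (position 0 is the rightmost)."""
    return sum(int(bit) * 2 ** position for position, bit in enumerate(reversed(bits)))

print(to_binary(123))           # 01111011
print(from_binary("01111011"))  # 123
print(to_binary(255))           # 11111111, the largest value 8 bits can hold
print(format(123, "08b"))       # python's built-in formatting agrees: 01111011
```

the `width` check mirrors the bulb picture: with 8 bulbs there is simply no pattern for 256.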

the bulbs stand in for the transistors inside a computer: tiny switches that control the flow of electricity to store and process information. modern machines contain billions of them. 💻

it is also worth noting that processors work on fixed-size chunks of bits. older machines used 8-bit or 16-bit processors, while modern machines use 32-bit or, most commonly today, 64-bit processors. a 32-bit value can represent 2 ^ 32 (about 4.3 billion) different values. 🍃

what about characters, text, images, and videos?

so far, we have seen how computers work at the lowest level and represent numbers as bits.

now, how do computers process images, files, and text?

by the exact same principle: assign a number to each character, then store that number in bits.

imagine we agree to represent the letter A as 4: it would then be stored as 100 using 3 bits, and as 0000000000000100 using 16 bits.

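that is where ASCII comes into play. it is simply a convention, agreed on by everyone, for representing letters, digits, punctuation, and special characters as numbers (and hence bits). in ASCII, the letter A is actually 65. for accented letters, other scripts, and emojis, modern systems follow Unicode, which extends the same idea.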
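python makes the character-to-number convention easy to poke at. `ord` gives a character's Unicode code point, which for plain ASCII characters is the same as its ASCII number, and `chr` goes back. the helper name `char_to_bits` is hypothetical, just for this sketch:

```python
def char_to_bits(ch: str) -> str:
    """The 8 bits that store a single ASCII character."""
    return format(ord(ch), "08b")

print(ord("A"))                     # 65, the agreed-upon number for "A"
print(char_to_bits("A"))            # 01000001, the bits actually stored
print(chr(0b01000001))              # A, going back from bits to the character

# a whole word is just a sequence of such numbers
print(list("Hi".encode("ascii")))   # [72, 105]
```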

the process is pretty much the same for colors as well.

it was observed that by mixing different portions of R(ed), G(reen), and B(lue) light, we can produce all the VIBGYOR colors. 🌈

so, just like with letters, the most intuitive answer to 'how do computers process colors, images, etc.?' is: by assigning numbers to represent a particular shade or color of a single dot on the screen. in images, these dots are known as pixels.

commonly, each of R, G, and B is stored using 8 bits (0s and 1s), so each of them can take a value between 0-255 [since 2 ^ 8 = 256].

by varying the amount of each, we create a new color. these combinations of three numbers are usually called RGB codes.
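to make RGB codes concrete, here is a python sketch that assumes the common layout of 8 bits per channel. the helper names `rgb_to_hex` and `rgb_to_bits` are hypothetical; the `#RRGGBB` hex form is the familiar notation used on the web, where each pair of hex digits is one 0-255 channel:

```python
def rgb_to_hex(red: int, green: int, blue: int) -> str:
    """Format three 0-255 channel values as a hex color code like #FF8000."""
    for value in (red, green, blue):
        if not 0 <= value <= 255:
            raise ValueError("each channel must fit in 8 bits (0-255)")
    return f"#{red:02X}{green:02X}{blue:02X}"

def rgb_to_bits(red: int, green: int, blue: int) -> str:
    """The 3 x 8 = 24 bits that would store this pixel's color, one group per channel."""
    return " ".join(format(value, "08b") for value in (red, green, blue))

print(rgb_to_hex(255, 128, 0))   # #FF8000, an orange
print(rgb_to_bits(255, 128, 0))  # 11111111 10000000 00000000
print(256 ** 3)                  # 16777216 possible colors with 8 bits per channel
```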

the same principle applies to everything else, including files, emojis, videos, etc.

conclusion

- 🔢 mathematics, through its abstractions, lays the foundation for a lot of things, including computer science, logic, and so on.

- 😡 do not hate it: innovation and learning are mostly interconnected, meaning that on a broader horizon, all subjects are linked.

- 😯 humans came up with a lot of conventions to agree on a set of principles, so that the abstraction is consistent.

- 🧏‍♂️ we define new things using previously defined terms. but it has to start somewhere: the very beginning of this chain of abstraction is actually undefined. things like true and false have no deeper definition; they are just another convention of ours.

that is it. I hope this was a great read. any suggestions on how I can improve my blogs, or an invitation to an online hangout, are absolutely welcome.

find me here 🧙‍♂️

relevant resources 🏖

- if you are starting out with computer science, I would highly recommend taking Harvard University's CS50 course, taught by David J. Malan. check it out 👩‍💻

- next up 👩‍💻: operations on vectors using programming 💻